You ask Claude to scaffold a new Astro project. It generates 50 lines of boilerplate. Burns context. Might have subtle issues you won’t notice until later.

Or: you teach your agent to run `npm create astro@latest`. Deterministic. Instant. 100% correct.

This is the first sign you’re using your agent wrong. If scaffolding exists, use scaffolding. If documentation exists, search documentation. The agent’s job is to find the right answer and use it — not invent a solution.

Adding an MCP server to Claude Code takes one command. Changing how your agent approaches problems takes intention.

The Invention Problem

When agents don’t know something, they don’t say “I don’t know.” They invent.

The problem is worse than it sounds. “Doesn’t know” really means the agent lacks enough context to reason correctly about the problem. Agents don’t have the self-awareness to tell you that. So they produce something plausible instead.

Invented solutions often work — just not the right way. A framework has three ways to do X: the current approach, the deprecated one kept for backwards compatibility, and the edge-case workaround. Your agent picks one. Probably not the one you want.

“Works but wrong” is worse than “doesn’t work.” Broken code fails visibly. Wrong code passes tests, ships to production, then causes problems you trace back days later. I’ve had situations where the agent wrote a hundred tests, all passing, and the feature was still wrong. Why? Because the prompt said “write tests” without specifying what to test. The agent tested what it built, not what you needed.

Without documentation access, you become the search agent. Hunting down docs. Reconstructing the context. Re-pasting links you already gave yesterday. You can do it, like a manager bringing pizza for the dev team, but it’s not the best use of your time.

What if the agent searched first?

Adding Ref MCP to Claude Code

One command unlocks the workflow:

```
claude mcp add --transport http Ref https://api.ref.tools/mcp --header "x-ref-api-key:YOUR_KEY"
```

Run this in your terminal, then launch Claude Code. If Claude Code is already running, restart it (as with any MCP change). Done.

What Ref does differently from just pasting docs:

- Precision retrieval — returns the most relevant snippets, not everything

- Partial extraction — the relevant part of the class reference, not the whole page

- Context-efficient — agent drives the retrieval, gets only what it needs

Ref works like a normal RAG system: your agent queries it, Ref returns top relevant articles, and the agent expands on those if necessary.
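For intuition, here is a toy version of that search-then-expand loop in Python. It is not Ref’s implementation or API: the mini-corpus, the `search` and `read` helpers, and the word-overlap scoring are hypothetical stand-ins for a real documentation index.

```python
from dataclasses import dataclass


@dataclass
class Section:
    url: str
    text: str


# Hypothetical mini-corpus standing in for documentation already split into sections.
CORPUS = [
    Section("docs/content-collections#define", "defineCollection declares a collection with a loader and a schema"),
    Section("docs/content-collections#query", "getCollection returns all entries in a collection"),
    Section("docs/routing#dynamic", "dynamic routes use getStaticPaths to generate pages"),
]


def score(query: str, text: str) -> int:
    # Crude relevance: number of distinct section words that also appear in the query.
    query_words = set(query.lower().split())
    return sum(1 for word in set(text.lower().split()) if word in query_words)


def search(query: str, k: int = 2) -> list[Section]:
    # Precision retrieval: rank every section, return only the top-k relevant snippets.
    scored = [(score(query, s.text), s) for s in CORPUS]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for points, s in scored[:k] if points > 0]


def read(url: str) -> str:
    # Expansion step: fetch a full section only when the snippet is not enough.
    return next(s.text for s in CORPUS if s.url == url)
```

The agent calls `search` first and gets a couple of short snippets with URLs; it calls `read` only when a snippet doesn’t answer the question. That ordering is what keeps context usage low compared with pasting whole pages.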

Now when I ask Claude to implement Astro content collections, it searches first. The proper solution instead of an invented one.

Pricing note: Ref is a paid service. As of February 2026, the Basic plan is $9/month for 1,000 credits. There’s also a free tier with 200 starter credits that don’t expire. For most individual developers, 1,000 credits per month is more than enough — you’d have to be actively investigating something or working as a team to hit that limit.

Enforcing Doc-First Behavior

Config alone isn’t enough. You encode the behavior in CLAUDE.md.

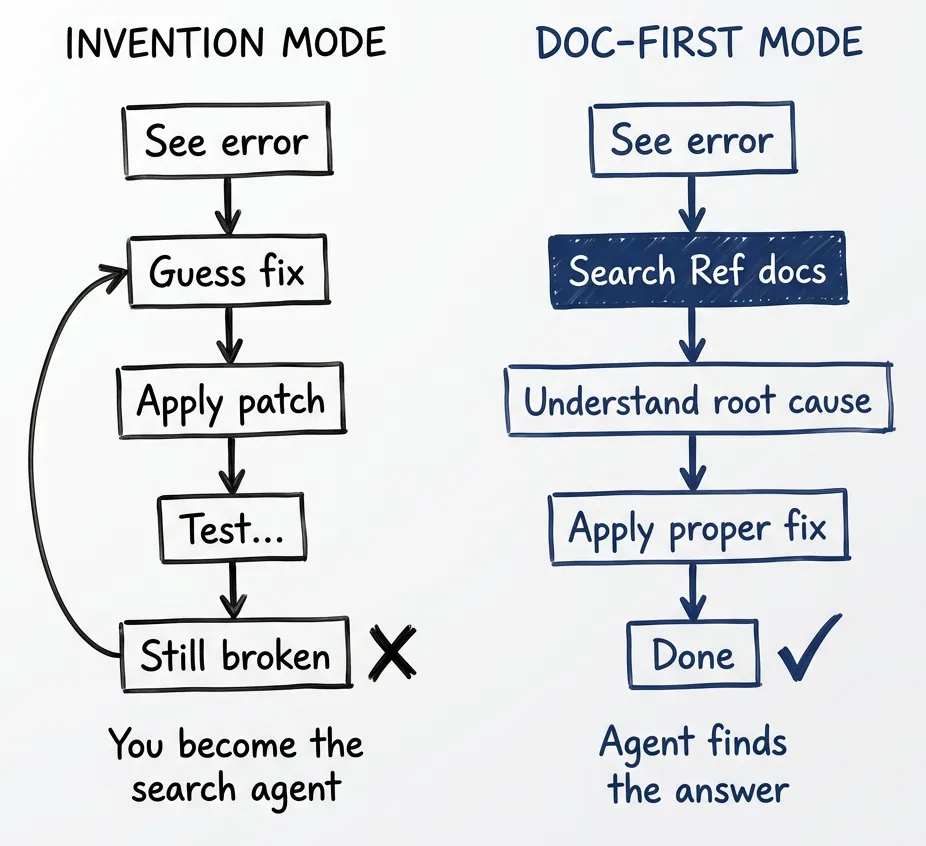

Anti-pattern (FORBIDDEN):

❌ See error → Guess fix → Apply patch → Hope it works

Required pattern:

✅ See error → Search Ref docs → Understand root cause → Apply proper fix

What to search for, not just “documentation”:

- Documentation — how the API works

- Examples — how others use it

- Best practices — the recommended way, not the backwards-compatible way

Why all three? Frameworks offer multiple approaches. Some are deprecated. Some are for edge cases. New code should use the current recommended pattern.
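A minimal sketch of that rule, assuming you already know each approach’s status from the docs. The `queries_for` and `pick_for_new_code` helpers and the status labels are hypothetical, not part of Ref or Claude Code.

```python
from dataclasses import dataclass


@dataclass
class Approach:
    name: str
    status: str  # hypothetical labels: "current", "deprecated", or "edge-case"


def queries_for(library: str, topic: str) -> list[str]:
    # One topic fans out into the three searches worth running.
    return [f"{library} {topic} {kind}" for kind in ("documentation", "examples", "best practices")]


def pick_for_new_code(approaches: list[Approach]) -> Approach:
    # New code uses the current recommended approach. Deprecated and edge-case
    # variants are never picked; if nothing current was found, refuse rather than guess.
    current = [a for a in approaches if a.status == "current"]
    if not current:
        raise LookupError("no current approach found; search again before guessing")
    return current[0]
```

Refusing instead of quietly falling back to a deprecated option mirrors the doc-first rule: no current answer means search again, not invent.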

From my demo project’s CLAUDE.md:

```
You MUST use Ref MCP to search documentation...
NO monkey patching — find the proper solution...
Update the ticket with findings.
```

From a larger production project:

```
Systematic Debugging: For bugs, invoke `/systematic-debugging` skill
BEFORE fixing. Research docs with `ref_search_documentation`.
Fix at correct layer — don't patch symptoms.
```

The principle is the same. Search first. Understand the root cause. Then implement.
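CLAUDE.md rules are guidance, so it can help to check afterwards whether a session actually followed them. A minimal sketch, assuming you have already extracted the ordered tool-call names from a session transcript (how you get them is out of scope here) and that file edits appear as `Edit` or `Write`. The helper names are hypothetical.

```python
# Assumed names of Claude Code's file-editing tools; adjust to what your transcripts show.
EDIT_TOOLS = {"Edit", "Write"}


def is_doc_search(tool_name: str) -> bool:
    # MCP tool names may carry a server prefix (e.g. "mcp__Ref__..."),
    # so match on the suffix rather than the exact string.
    return tool_name.endswith("ref_search_documentation")


def searched_before_editing(tool_calls: list[str]) -> bool:
    # Walk the calls in order: whichever comes first, a search or an edit, decides.
    for name in tool_calls:
        if is_doc_search(name):
            return True   # doc-first was followed
        if name in EDIT_TOOLS:
            return False  # edited before any search: guess-and-patch
    return True  # no edits at all, nothing to violate
```

A failing check is a prompt for the same loop as any other rule: ask the agent why it skipped the search, then tighten the CLAUDE.md wording.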

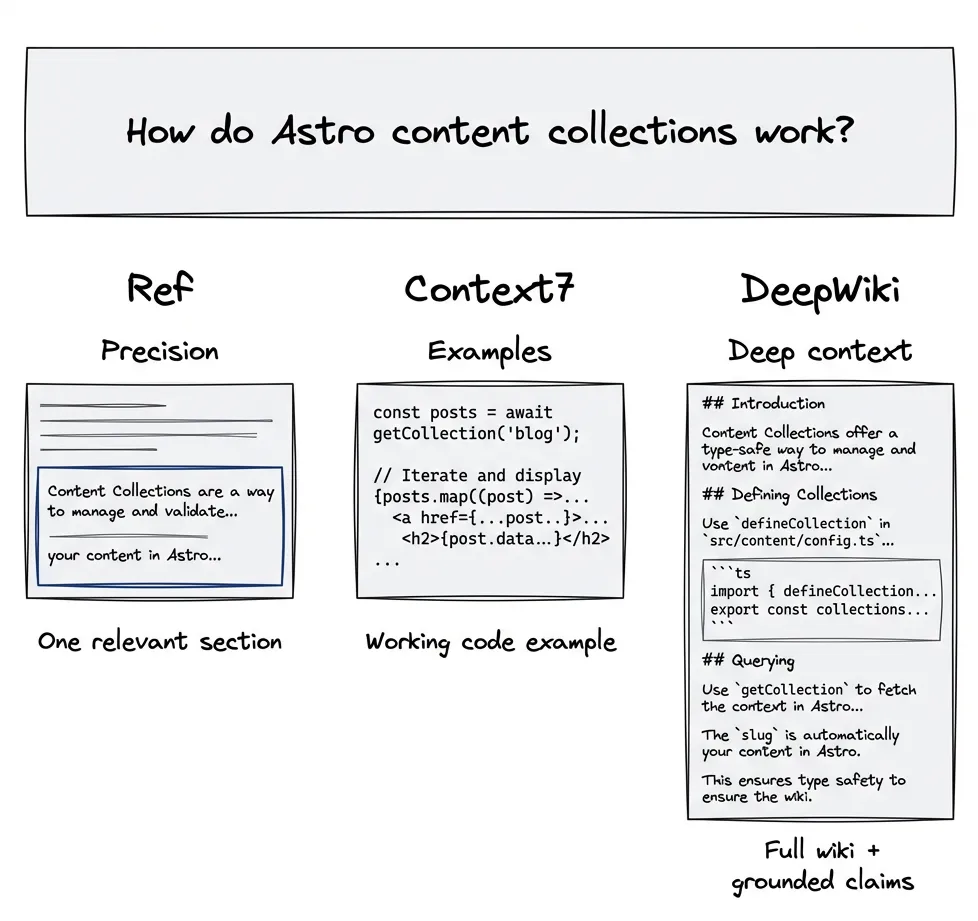

Ref vs Context7 vs DeepWiki

Different tools, different philosophies. Pick based on your needs.

Ref

Philosophy: Precision over volume.

Best for precise answers, specific API questions, error lookups. Returns the most relevant snippets and lets the agent expand if needed. More effective than general web search because documentation has already been processed for agent consumption.

Setup command shown above.

Context7 (by Upstash)

Philosophy: Show, don’t explain — real code examples.

Best for pattern-based learning, seeing how things work in practice. Returns working code examples, version-specific. Include “use context7” in your prompt to trigger it.

```
claude mcp add context7 -- npx -y @upstash/context7-mcp
```

DeepWiki (by Cognition)

Philosophy: Comprehensive understanding through AST parsing.

Best for open source repos and understanding unfamiliar codebases. Builds a wiki from source, with every claim grounded in code. Covers 30,000+ popular repos with 4 billion+ lines indexed. Free, no API key needed. Private repos require a Devin account.

My take: You need all levels — mental models, how-tos, and code examples. Ref handles the precise lookups. DeepWiki handles the deep dives. Context7 fills the middle if you prefer pattern-first learning.

Combinations that work:

- Ref alone — most common, covers 80% of cases

- Ref + DeepWiki — precise lookups plus deep understanding of OSS dependencies

- Context7 + DeepWiki — if you prefer pattern-first approach

- Ref + Context7 — coverage gaps exist; use Ref for precision, fall back to Context7 for examples when Ref doesn’t have the library
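The Ref + Context7 fallback can be sketched as a priority-ordered lookup. The provider functions below are hypothetical stubs, not the real MCP tools; in practice the agent makes this choice itself, guided by your CLAUDE.md.

```python
from typing import Callable, Optional

# A provider returns an answer, or None when it doesn't cover the library.
Provider = Callable[[str, str], Optional[str]]


def lookup(library: str, question: str, providers: list[tuple[str, Provider]]) -> tuple[str, str]:
    # Try providers in priority order: precise lookups first, examples as fallback.
    for name, provider in providers:
        answer = provider(library, question)
        if answer is not None:
            return name, answer
    raise LookupError(f"no provider covers {library}")


def ref_stub(library: str, question: str) -> Optional[str]:
    # Hypothetical: pretend only these libraries are indexed.
    return f"Ref snippet: {library} {question}" if library in {"astro", "react"} else None


def context7_stub(library: str, question: str) -> Optional[str]:
    # Hypothetical: pretend every library has examples.
    return f"Context7 example: {library} {question}"


PROVIDERS = [("Ref", ref_stub), ("Context7", context7_stub)]
```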

Cost note: Context7 is free for public libraries (1,000 requests/month, no API key required). Ref’s free tier is 200 credits. If you’re cost-conscious, Context7 for examples plus Ref for precise lookups is a valid strategy.

When Ref Isn’t Enough

RAG-on-demand has limits. Know them.

Version Mismatch

You’re on library v2.3. Ref returns v3.0 docs. The agent figures it out eventually, but not by a straight path. Solution: specify the version in your queries, or accept the exploration cost.
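One way to specify the version without thinking about it is to read it from the project’s manifest. A minimal sketch, assuming a JavaScript project with a `package.json`. `pinned_version`, `versioned_query`, and the `some-lib` package are hypothetical, and appending `v2.3` to the query is just one plausible way to phrase a versioned search.

```python
import json
import re
from typing import Optional


def pinned_version(package_json: str, dep: str) -> Optional[str]:
    # Look up the dependency in dependencies, then devDependencies.
    data = json.loads(package_json)
    for section in ("dependencies", "devDependencies"):
        spec = data.get(section, {}).get(dep)
        if spec:
            # Strip range operators such as ^, ~, >= and keep major.minor.
            match = re.match(r"[\^~>=<\s]*(\d+)\.(\d+)", spec)
            if match:
                return f"{match.group(1)}.{match.group(2)}"
    return None


def versioned_query(query: str, dep: str, package_json: str) -> str:
    # Append the installed version so results match what the project uses.
    version = pinned_version(package_json, dep)
    return f"{query} v{version}" if version else query
```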

Project-Specific Decisions

You chose LangChain over LangGraph because your project is small and you’re more comfortable with the API. Ref can’t know this.

Solution: describe your decisions in CLAUDE.md, so the agent knows which library, and which approach, it should be looking up.

Correctly steering with guardrails increases retrieval accuracy — not because Ref is smarter, but because your agent queries it more precisely.

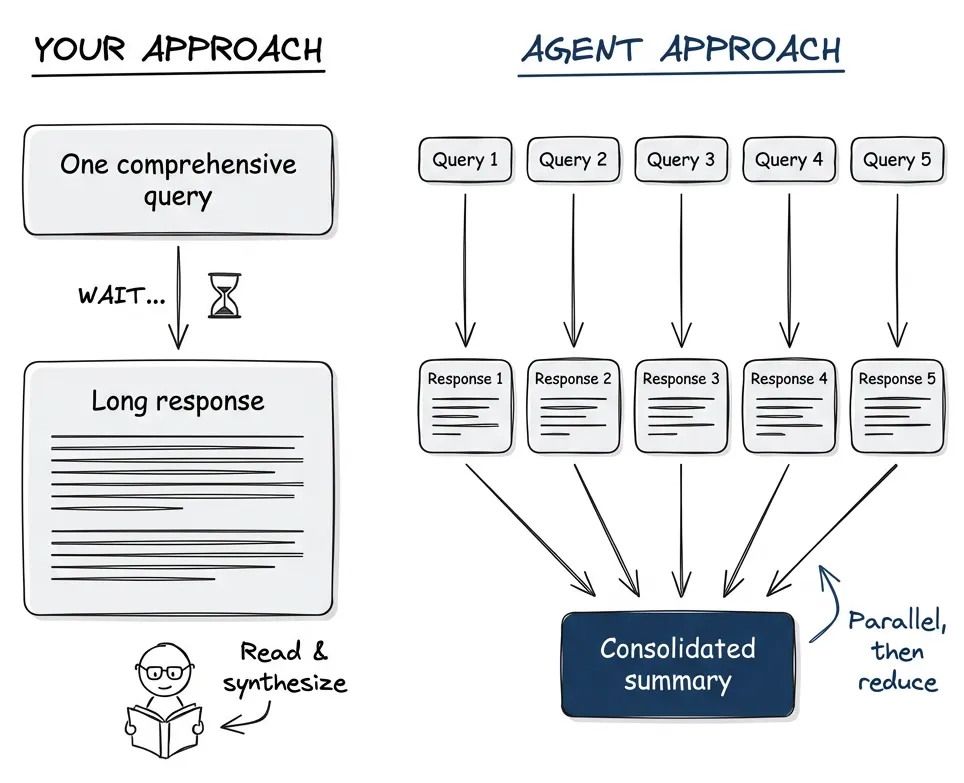

The Map-Reduce Pattern

When you need to research something, you naturally craft one comprehensive query, send it off, wait for the deep research to complete, then spend time reading and synthesizing the result. That’s your workflow — optimize the query, minimize round trips, read carefully.

Agents work differently. They can send five precise queries in parallel, get five focused responses, and consolidate the results before you’d finish reading the first one. They don’t get tired reading. They don’t need coffee breaks between search results.

Example: You want to understand how authentication works in a framework.

Your approach: “Explain authentication in Framework X including JWT handling, session management, middleware patterns, and best practices” — one query, wait, read a long response.

Agent approach: Five parallel queries — “Framework X JWT setup”, “Framework X session middleware”, “Framework X auth best practices”, “Framework X token refresh pattern”, “Framework X protected routes” — five focused responses, consolidated into one summary.

The agent’s approach is faster because it can parallelize and doesn’t have to stop and absorb each result before moving on. Mental model: map-reduce for your brain. You define the pattern (what to research), the agent maps it across multiple queries, then reduces the results into what you need.
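The map-reduce shape can be sketched with `asyncio`. `search_docs` is a hypothetical stub standing in for a documentation search call, and the reduce step here only concatenates where an agent would synthesize; the point is that `asyncio.gather` runs the focused queries concurrently (map) before the results are merged (reduce).

```python
import asyncio


async def search_docs(query: str) -> str:
    # Hypothetical stand-in for one documentation search call.
    await asyncio.sleep(0.01)  # simulated network latency
    return f"[{query}] key points"


async def research(framework: str, aspects: list[str]) -> str:
    queries = [f"{framework} {aspect}" for aspect in aspects]
    # Map: fire all focused queries at once; gather keeps results in query order.
    results = await asyncio.gather(*(search_docs(q) for q in queries))
    # Reduce: consolidate the focused answers into one summary.
    return "\n".join(f"- {r}" for r in results)


if __name__ == "__main__":
    aspects = ["JWT setup", "session middleware", "auth best practices",
               "token refresh pattern", "protected routes"]
    print(asyncio.run(research("Framework X", aspects)))
```

Because the calls overlap, total wall time is closer to the slowest single query than to the sum of all five.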

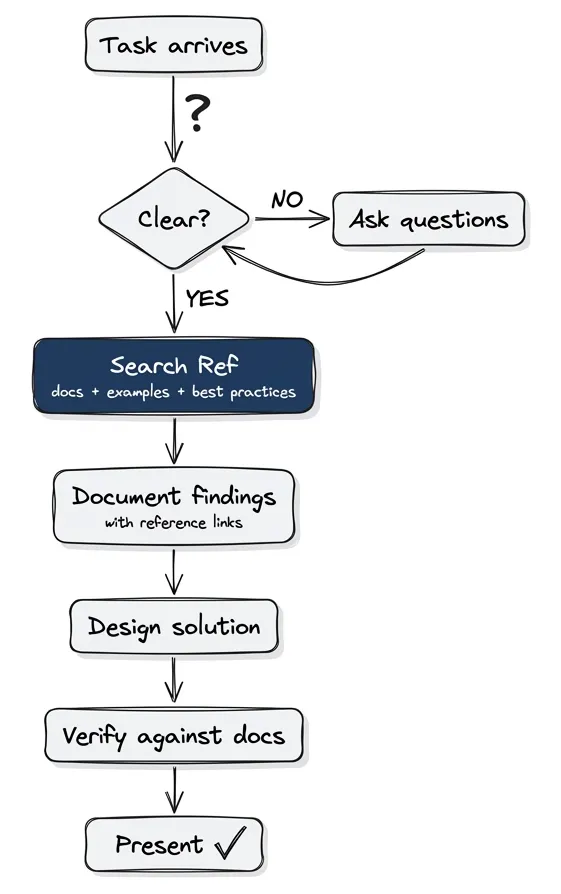

Building a Research Workflow

Documentation search often goes hand in hand with planning, so wrap the two in a slash command.

The instructions to the agent follow this sequence:

- Ask questions unless requirements are completely clear

- When clear, use Ref for best practices and relevant APIs

- Document findings with reference links

- Suggest design

- Verify design follows best practices

- Present solution

Reality check: some steps are hard to enforce strictly. This is guidance, not rigid enforcement. Run it, observe behavior, ask Claude why it skipped a step, improve the instructions.

Different modes need different workflows:

- Planning mode — research + design

- Documentation mode — writing/updating docs

- Implementation mode — executing the plan

- Testing mode — quality verification

A single /research command that encodes your research workflow saves re-explaining every time. (Part 3 covers how to create custom commands.)
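As a sketch of what such a command might contain, the script below writes the research sequence into a Markdown file. It assumes Claude Code’s convention of project-level custom commands in `.claude/commands/<name>.md`, with `$ARGUMENTS` replaced by whatever you type after the command; the step wording and helper names are hypothetical.

```python
from pathlib import Path

# The research sequence described above, one instruction per step.
STEPS = [
    "Ask clarifying questions unless the requirements are completely clear.",
    "Once clear, use Ref to search for best practices and the relevant APIs.",
    "Document findings with reference links.",
    "Suggest a design.",
    "Verify the design follows the best practices you found.",
    "Present the solution.",
]


def render_command(steps: list[str]) -> str:
    # $ARGUMENTS is the placeholder for the text typed after /research.
    lines = ["Research and plan the following task: $ARGUMENTS", ""]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines) + "\n"


def write_command(project_root: Path, name: str = "research") -> Path:
    # Assumed location for project-level custom commands.
    path = project_root / ".claude" / "commands" / f"{name}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(render_command(STEPS), encoding="utf-8")
    return path
```

Running `write_command(Path("."))` from the project root creates `.claude/commands/research.md`, after which the command should be available as `/research`.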

The Before/After

Same task, different outcomes.

Without Ref (Copilot Mode)

- “Implement content collections in Astro”

- Agent writes code from memory — maybe stale by months

- Uses deprecated `defineCollection` syntax

- Works, but throws warnings

- You Google it, find the current approach, paste docs, ask for rewrite

- You became the search agent

With Ref (Agent Mode)

- “Implement content collections in Astro”

- Agent searches Ref: “Astro content collections best practices”

- Finds current syntax, recommended patterns

- Implements correctly the first time

- You verify output, not research

The difference: in one case you do the context-switching; in the other, nobody does.

Start Here

If you implement one thing from this series, make it this. Documentation access through MCP servers is the foundation for effective Claude Code workflows.

You can make your agent noticeably smarter and your workflow more enjoyable. One command to add Ref. A few rules in CLAUDE.md to enforce the behavior. Maybe a /research command to encode your planning workflow.

The agent already knows how to search. You just have to tell it that searching is mandatory — and where to search. Ref is more effective than general web search because the documentation has been processed for agent consumption. The agent gets focused answers, not ten blue links to parse.

Blueprint repository: github.com/postindustria-tech/agentic-development-demo — includes the CLAUDE.md patterns for doc-first behavior described here.

Second in a series on agentic development with Claude Code. Previously: Stop Using Claude Code Like a Copilot. Next: deep dives into skills, verification patterns, and persistence.

Related: If you want to understand how to build skills that encode this kind of expertise, see The Agent Skills series. For why context management matters for security, see I Broke a 43K-Star AI Assistant in 90 Minutes.