By failing to prepare, you are preparing to fail.

Benjamin Franklin said that. Maybe a Roman general. Either way, everyone nods along — then picks up the phone with nothing but a name and a prayer.

We don’t do that.

In technical sales, we spend 10x more preparing for a call than making it. AI handles the preparation. But the call itself? That’s a human.

This isn’t about technology preferences. It’s math. And philosophy.

The Technical Sales Philosophy

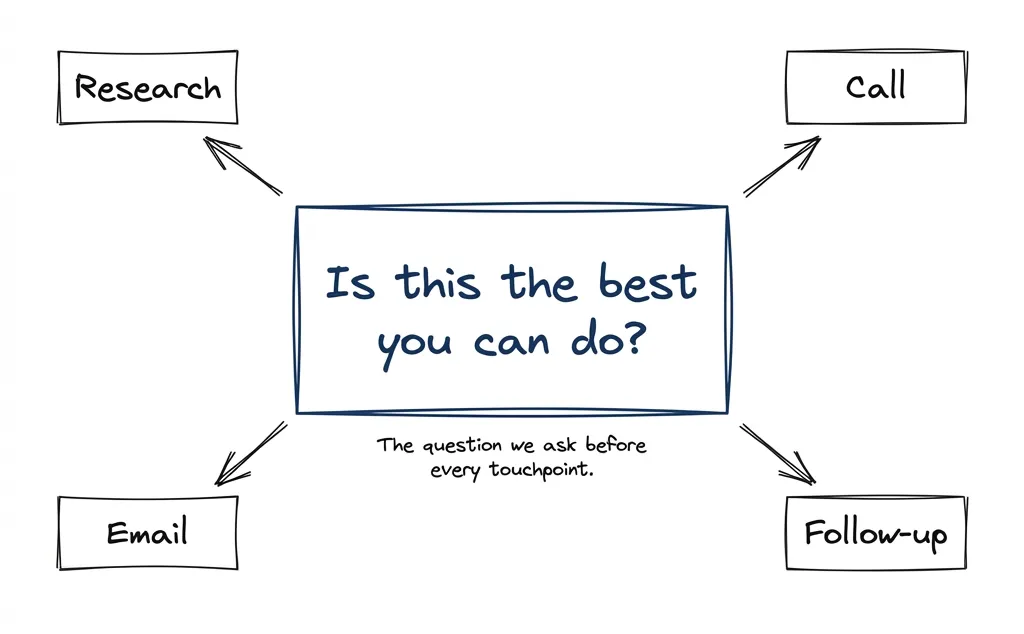

Here’s what I believe: with AI-based outreach, our job is to put in the maximum possible effort, using everything we have from prompting knowledge to data, and to reach out only where we believe there’s a high probability of success.

Unlike salespeople who spam, we don’t have huge markets to blast. So we do extensive preparation to be as relevant as possible.

This serves everyone. It’s in the prospect’s interest that we don’t waste their time with irrelevant calls. It’s in our interest because we want to be effective. Did you prepare this outreach in the best possible way? Did you do this job to the best of your ability?

Because here’s the reality: you get one shot. Maybe a few shots if you count the 90-day cooldown before re-engaging. You have to use them well.

The Math

By the time someone lands on our call list, we’ve spent real money:

- Classification prompts to filter company type

- Segment analysis to match our portfolio

- Deep research on their situation

- People enrichment to find the right contact

- Signal detection for timing

- Email and LinkedIn to warm them up

Maybe $5-10 per qualified prospect. Weeks of work compressed into a phone number.

The call? Pennies. Human or robot — negligible difference.

So why cheap out on the only part that matters?

If they pick up, we get one shot. After all that work finding the right person at the right moment, I’m not sending them to a robot.
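To make the arithmetic concrete, here is a rough sketch. The $5-10 preparation cost comes from the figures above; the two per-call costs are placeholder assumptions standing in for "pennies", not measured numbers.

```python
# Rough per-prospect cost comparison.
PREP_COST_RANGE_USD = (5.00, 10.00)   # from the text
ASSUMED_AI_CALL_COST_USD = 0.10       # hypothetical placeholder
ASSUMED_HUMAN_CALL_COST_USD = 0.50    # hypothetical placeholder


def automation_savings_share(prep_cost: float) -> float:
    """Share of total per-prospect spend saved by swapping the human call for an AI call."""
    human_total = prep_cost + ASSUMED_HUMAN_CALL_COST_USD
    ai_total = prep_cost + ASSUMED_AI_CALL_COST_USD
    return (human_total - ai_total) / human_total


for prep in PREP_COST_RANGE_USD:
    share = automation_savings_share(prep)
    print(f"prep ${prep:.2f}: automating the call saves {share:.1%} of total spend")
```

Under these assumptions, automating the call trims only a few percent of the spend while putting the whole preparation budget at risk.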

We’re Not Selling Insurance

If your offer is typical — “list your property on our marketplace” — AI calling can work. Standard script. Predictable questions. No persuasion needed.

Airbnb probably can call potential hosts automatically. Same offer for everyone. Makes sense.

But we sell B2B services — custom software, engagements starting at six figures. Every prospect has different problems, different contexts, different reasons they might care.

High variance conversations. Prospects ask technical questions. They mention competitors. They want to know: why now? Why you? Why this approach?

Current AI handles this okay. “Okay” isn’t good enough when you spent weeks finding this person.

The AI Cold Calling Latency Problem

There’s a technical reality people skip over.

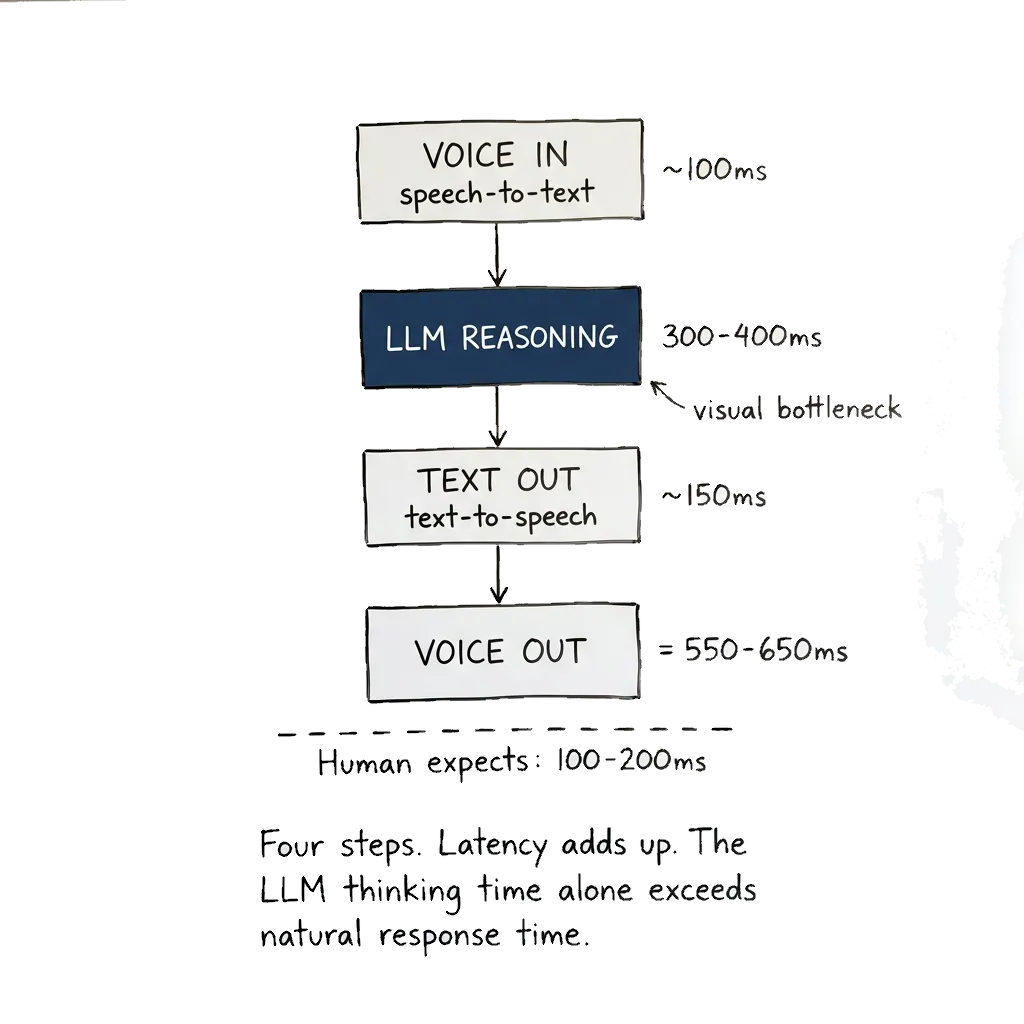

Most voice AI agents work like this: your voice gets transcribed to text, that text goes to an LLM with context, the LLM reasons through a response, then that response gets converted back to speech. Four steps. Latency adds up at each one.

If you want natural-sounding speech (not robotic), your text-to-speech phase alone adds considerable time. And if you want the LLM to actually think — not just pattern-match — you’re looking at 300-400 milliseconds minimum for reasoning.

Voice-to-voice models exist, but you lose the ability to steer the conversation with nuance. Pick your tradeoff.

Normal conversation? 300-400ms is an eternity.

Human speech has rhythm. Someone finishes a sentence, they expect a response in 100-200ms. Anything longer feels off.
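A back-of-the-envelope latency budget shows why the cascaded pipeline struggles. In this sketch only the 300-400 ms reasoning range and the 100-200 ms listener expectation come from the text; the other stage figures are assumed placeholders, so treat the totals as illustrative.

```python
# Latency budget for a cascaded voice agent: speech -> text -> LLM -> speech.
# Each entry is (best_case_ms, worst_case_ms).
STAGE_LATENCY_MS = {
    "speech_to_text": (100, 200),    # assumed
    "context_assembly": (20, 50),    # assumed: sending the transcript plus context to the LLM
    "llm_reasoning": (300, 400),     # from the text
    "text_to_speech": (150, 300),    # assumed
}
EXPECTED_RESPONSE_MS = (100, 200)    # what a listener expects, per the text


def total_latency(stages):
    """Sum best-case and worst-case latency across all stages."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


best_case, worst_case = total_latency(STAGE_LATENCY_MS)
# How far even the best case lands past the slowest acceptable reply.
overshoot_ms = best_case - EXPECTED_RESPONSE_MS[1]
print(f"pipeline: {best_case}-{worst_case} ms, overshoot of at least {overshoot_ms} ms")
```

Even with generous numbers for the other stages, the reasoning step alone already exceeds what a listener expects.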

In high-pressure sales — navigating objections, building trust in real-time — that delay kills you. The prospect pattern-matches to “robot.” Trust calculation changes instantly.

High-volume, low-value outreach? Maybe acceptable.

$100k+ services conversation? No.

What We Actually Do

If not AI for calls, then what?

Everything else.

The Research Agent

We built what we call a “sales agent” — Claude Code with access to:

- Perplexity for web research

- OpenAI for structured analysis

- HubSpot for CRM data

- Slack for context on existing relationships

The job: build a complete dossier on every organization and person we’re calling.

Not a company description and a LinkedIn summary. We look for pressure signals: reasons this company might need us right now.

What counts as a pressure signal:

- Leadership gave an interview two months ago about new systems

- Construction firm won a public contract but lacks field management software

- Competitor launched something that shifts their market

- Regulatory changes hitting their industry

- Hiring patterns that suggest expansion or gaps

This stuff doesn’t show up on Google page one. You have to connect dots across sources. Reason about what it means for their business.

This is what we’re teaching AI to do. Thinking. Not talking.

I can’t claim this is a proven recipe that works like a charm. We’re figuring it out, constantly. But I believe this approach — systematic research before any outreach — represents what more people should be doing.
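One way to picture the dossier is as a small data structure that keeps each pressure signal next to its source and date. This is a minimal sketch, not our actual schema: the class names, field names, 90-day freshness window, and sample company are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PressureSignal:
    kind: str          # e.g. "leadership_interview", "regulatory_change"
    summary: str
    source: str        # where the signal was found
    observed_on: date


@dataclass
class Dossier:
    company: str
    contact: str
    signals: list[PressureSignal] = field(default_factory=list)

    def recent_signals(self, today: date, max_age_days: int = 90) -> list[PressureSignal]:
        """Return signals observed within max_age_days, newest first."""
        fresh = [s for s in self.signals if (today - s.observed_on).days <= max_age_days]
        return sorted(fresh, key=lambda s: s.observed_on, reverse=True)


# Hypothetical example data.
dossier = Dossier(
    company="Example Construction Co",
    contact="Head of Operations",
    signals=[
        PressureSignal("public_contract", "Won a public contract", "web research", date(2026, 1, 10)),
        PressureSignal("hiring", "Old job posting for field managers", "job board", date(2025, 3, 1)),
    ],
)
recent = dossier.recent_signals(today=date(2026, 2, 1))
print([s.kind for s in recent])
```

Keeping the date and source on every signal is what makes a later verification step possible.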

The Playbook

Raw research isn’t enough. You need to know what to do with it.

We keep a playbook: a YAML file that maps triggers to actions. When the research agent spots a situation, it matches it to the playbook and recommends an approach.

Fundamentally, this playbook is how you think about triggers in your customer’s buying journey and what you should be doing about them. What might your buyers want? How do you track their behavior? What do you do to reactivate them? That’s the starting point. It varies by industry — though honestly, we don’t vary ours enough yet.

We took a shortcut. B2B service deals generally follow similar triggers. We used the Challenger Sale methodology — the stuff about champions, mobilizers, leading with insight. Vibe-created a first version, reviewed it quickly, and iterated from there.

Here’s what a trigger could look like:

    - id: new_cto_vp_eng
      name: "New CTO / VP Engineering"
      priority: "CRITICAL"
      why_it_works: |
        New leaders have budget, mandate, and urgency. They need to
        diagnose before prescribing but face pressure to show roadmap
        in 90 days. They inherited problems they didn't create.
      challenger_insight: |
        Most new CTOs waste their first year firefighting instead of
        building because they prescribed before diagnosing. Your
        predecessor had a vendor. If that vendor was doing their job,
        you wouldn't have inherited this mess.
      opening_move: |
        Congrats on the new role. You're likely inheriting a roadmap
        you didn't write and a tech stack you didn't build. Most new
        CTOs spend year one firefighting legacy debt instead of
        shipping. We run a 2-week Technical Due Diligence Sprint:
        clean-slate diagnosis before you commit to the board.
      killer_question: |
        When you present your 90-day plan to the board, will it be
        based on your team's optimistic estimates, or an independent
        audit of the code reality?

This example uses the Challenger Sale methodology, but the framework could be anything. What matters more is that it captures what you think your buyers might want and how you respond to their signals.

The playbook has dozens of triggers. API outages. Funding rounds. Leadership changes. Expansion signals. Regulatory pressure. Each with specific angles, openers, and questions.
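The matching step can be sketched in a few lines. This assumes the playbook has already been parsed into a list of dictionaries; only the `new_cto_vp_eng` entry and the `CRITICAL` priority come from the example above, while the other entries, the remaining priority levels, and the `recommend` function are hypothetical.

```python
# Lower number = handled first. Only "CRITICAL" appears in the original playbook example.
PRIORITY_ORDER = {"CRITICAL": 0, "HIGH": 1, "MEDIUM": 2, "LOW": 3}

# Playbook entries as they might look after parsing the YAML file.
PLAYBOOK = [
    {"id": "new_cto_vp_eng", "name": "New CTO / VP Engineering", "priority": "CRITICAL"},
    {"id": "funding_round", "name": "Funding round", "priority": "HIGH"},   # hypothetical
    {"id": "api_outage", "name": "API outage", "priority": "MEDIUM"},       # hypothetical
]


def recommend(detected_trigger_ids, playbook):
    """Return playbook entries matching the detected triggers, most urgent first."""
    by_id = {entry["id"]: entry for entry in playbook}
    matches = [by_id[t] for t in detected_trigger_ids if t in by_id]
    # Unknown priority labels sort after every known one.
    return sorted(matches, key=lambda e: PRIORITY_ORDER.get(e["priority"], len(PRIORITY_ORDER)))


# Triggers the research agent might report; "office_move" has no playbook entry.
recommended = recommend(["funding_round", "office_move", "new_cto_vp_eng"], PLAYBOOK)
print([entry["id"] for entry in recommended])
```

Triggers without a playbook entry are simply dropped here; in practice they might be worth logging as candidates for new entries.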

The Fact-Check

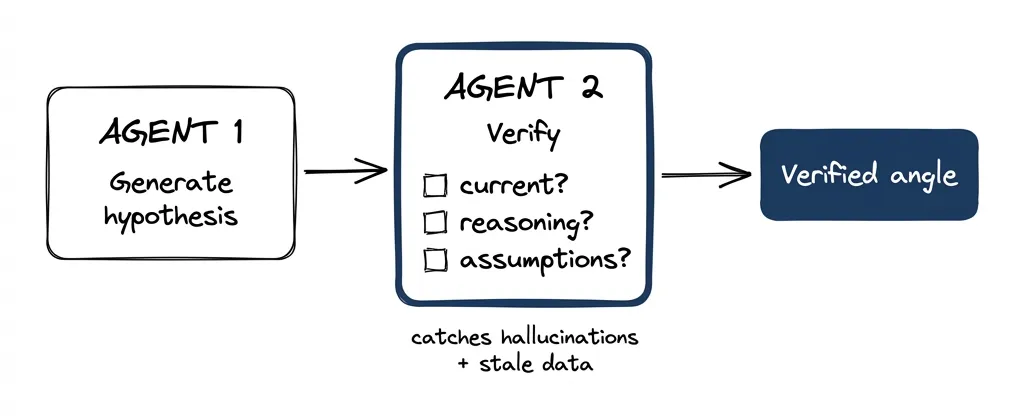

One agent generates hypotheses — “this company might need us because X, try angle Y.”

A second agent tears it apart. Checks:

- Is the trigger actually current? Not 18-month-old news?

- Does our reasoning hold?

- Are we assuming things we can’t support?

Only verified angles reach the salesperson.

This two-agent pattern — generate, then verify — catches hallucinations and stale data. The stuff that would embarrass us on a call.
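The control flow of generate-then-verify is simple enough to sketch. In a real setup the verifier would be a second LLM call; here it is replaced by two rule-based checks (freshness and evidence) so the example runs on its own. The names, the 180-day cutoff, and the sample hypotheses are assumptions.

```python
from dataclasses import dataclass
from datetime import date

MAX_TRIGGER_AGE_DAYS = 180  # assumed freshness cutoff


@dataclass
class Hypothesis:
    company: str
    trigger: str
    angle: str
    trigger_date: date
    evidence: list[str]   # sources backing the claim


def verify(h: Hypothesis, today: date) -> list[str]:
    """Return objections to the hypothesis; an empty list means it passes."""
    objections = []
    if (today - h.trigger_date).days > MAX_TRIGGER_AGE_DAYS:
        objections.append("trigger is stale")
    if not h.evidence:
        objections.append("no supporting evidence")
    return objections


def verified_angles(hypotheses, today):
    """Keep only the hypotheses the verifier could not object to."""
    return [h for h in hypotheses if not verify(h, today)]


# Hypothetical output of the generating agent.
today = date(2026, 1, 15)
candidates = [
    Hypothesis("Acme", "new CTO hired", "due diligence sprint", date(2025, 12, 1), ["job announcement"]),
    Hypothesis("Beta", "funding round", "scale-up audit", date(2024, 6, 1), ["news article"]),
    Hypothesis("Gamma", "regulatory change", "compliance rebuild", date(2026, 1, 5), []),
]
approved = verified_angles(candidates, today)
print([h.company for h in approved])
```

Returning the objections rather than a bare pass/fail keeps a record of why an angle was rejected.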

What Comes Out

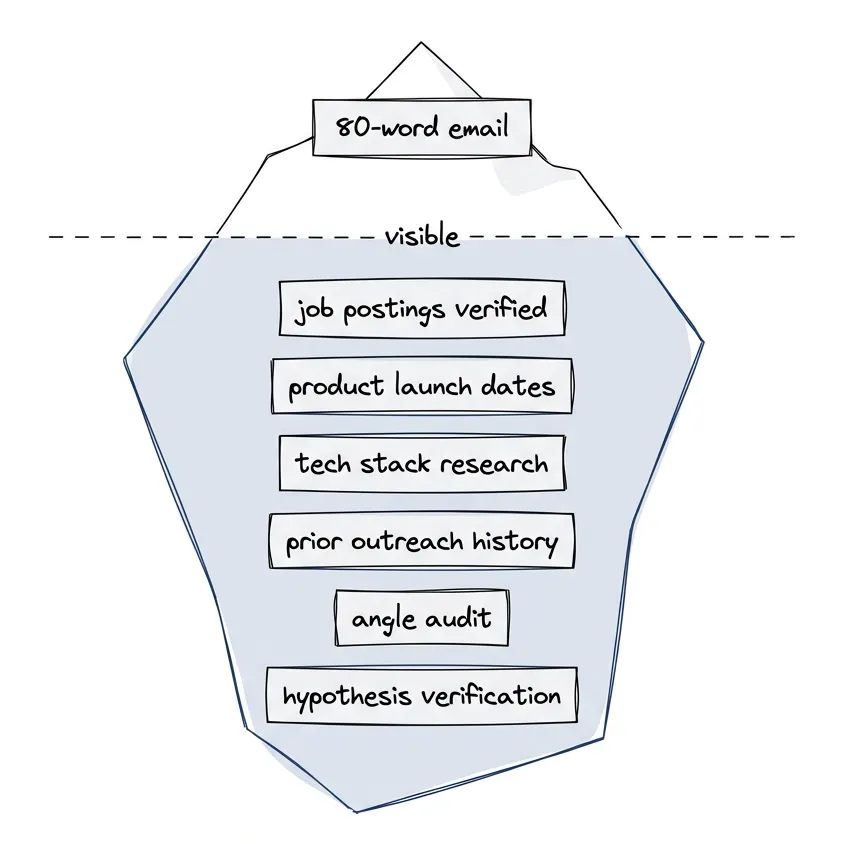

Here’s an example of what this research produces — a real email draft (company details redacted):

[Prospect],

Noticed you’re hiring a Sr. Director, Head of Engineering while [Product] is four weeks into market. That’s a lot of decisions for a new leader to inherit on day one.

Your team shipped Databricks integration, multi-cloud pipelines, and a self-service platform — solid execution. The question is whether that architecture is ready for the AI adoption and enterprise scale your job posting calls for, or if your new leader will spend Q1 auditing instead of building.

Would a fresh architecture read help them hit the ground running?

80 words. Behind it: verified job postings, product launch dates, technical stack research, prior outreach history, and an audit of what angles we’ve already tried.

The SDR sees a condensed version of this context. When they call, they’re not improvising — they know why this person, why now, what angle.

Re-engagement

Same principle. When someone goes quiet, we don’t send “checking in” after three weeks.

We look for what changed.

Generic re-engagement (everyone does this):

- “Saw you have a job posting…”

- “Noticed your funding announcement…”

What we do:

- “I saw a merger between X and Y — they’re in your space. How does that affect your Q2 plans?”

- “The new regulation drops in March. Are your clients changing priorities?”

Better conversations. The prospect engages differently because you’re discussing their world. Not yours.

We Tried AI Cold Calling. It Failed.

Full disclosure: we experimented with AI for calls.

Hypothesis: validate which numbers actually pick up.

Built a system with Twilio and text-to-speech. Call everyone. If they answer, confirm identity with a simple question. Record who picks up. Build a “validated list” with near-100% connection rates.

Then humans call the validated list. Efficiency without AI doing the pitch.

Result: same 10-15% connection rate as always. Validated numbers performed no better than the original list.

I still don’t fully get why. Number reputation? Timing? Who knows.

Pulled the plug.

As for AI doing the actual pitch? Never tried it. I couldn’t figure out how the prospect would feel valued talking to a robot.

“You’re important enough for extensive research, but not important enough for a real conversation.”

That’s what sending a robot says. The choice of medium communicates something beyond the words.

Where AI Actually Helps: Training

Here’s where AI voice does make sense for us: roleplay.

I firmly believe roleplay is the single best booster of pitch quality you can give your team. No matter their experience level. When you have ten seconds to express why you called, preparation gives you the material — but training makes you effective.

We use AI roleplay especially when rolling out a new offer. Set up scenarios, run them ten times, be as embarrassing as you want. No one’s watching. No judgment.

Contrary to the belief that these tools cost millions: in 2026, you can set this up with Gemini Flash voice-to-voice and an elaborate prompt. Code it in AI Studio in twenty minutes. The technology isn’t the hard part. The hard part is how you prompt it, how you set up the scenario — which again comes back to understanding your segment and your customer.

If the AI responds in 400 milliseconds during training, who cares? The point is putting your SDR in situations as close to reality as possible, and for that it works.
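Since the scenario setup is the hard part, here is a minimal sketch of how a roleplay prompt could be assembled from a scenario description. It makes no model API calls; the class, the function, and the sample scenario are hypothetical, and the resulting string would be pasted in as the system prompt of whichever voice model you use.

```python
from dataclasses import dataclass


@dataclass
class RoleplayScenario:
    persona: str              # who the AI plays
    company_context: str
    offer: str                # what the SDR is pitching
    objections: list[str]
    attitude: str = "skeptical"


def build_system_prompt(s: RoleplayScenario) -> str:
    """Assemble a system prompt that puts the model in the prospect's seat."""
    objection_lines = "\n".join(f"- {o}" for o in s.objections)
    return (
        f"You are {s.persona}. Company context: {s.company_context}\n"
        f"You are receiving an unexpected sales call about: {s.offer}\n"
        f"Your attitude is {s.attitude}. Stay in character for the whole call.\n"
        "Give the caller about ten seconds to explain why they called, then push back.\n"
        "Raise these objections naturally during the conversation:\n"
        f"{objection_lines}"
    )


# Hypothetical scenario for a new offer.
scenario = RoleplayScenario(
    persona="a newly hired CTO",
    company_context="mid-size logistics firm with an inherited legacy stack",
    offer="a 2-week technical due diligence sprint",
    objections=["We already have a vendor", "I have no budget this quarter"],
)
prompt = build_system_prompt(scenario)
print(prompt)
```

Swapping the persona and objections per segment is where the understanding of your customer shows up.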

The Future of AI in Technical Sales

Will this change? Probably.

AI voice improves fast. Latency will shrink. Someone will crack this.

But here’s my bet: by the time AI can truly handle high-stakes sales conversations, everyone will know it’s AI. The uncanny valley will flip. “AI caller” will be like “AI-generated art” — technically impressive, emotionally hollow.

When I’m wrong, I’ll switch. Not ideological about this. Practical.

But not yet. Most companies racing to automate calls are ignoring where AI actually creates value: the thinking before you dial.

Takeaways

In high-ticket technical sales, your call is only as good as your preparation. AI makes preparation dramatically better — finding signals you’d miss, connecting dots, verifying reasoning before you act.

But when they pick up? That’s on you. Human judgment, adapting in real-time.

My approach to this is systematic and technical. You’re reading a blog from a technical CEO. If that’s not your style, that’s fine.

We don’t use AI cold calling. We use AI to make every technical sales call count.

If they pick up, I want my best shot.